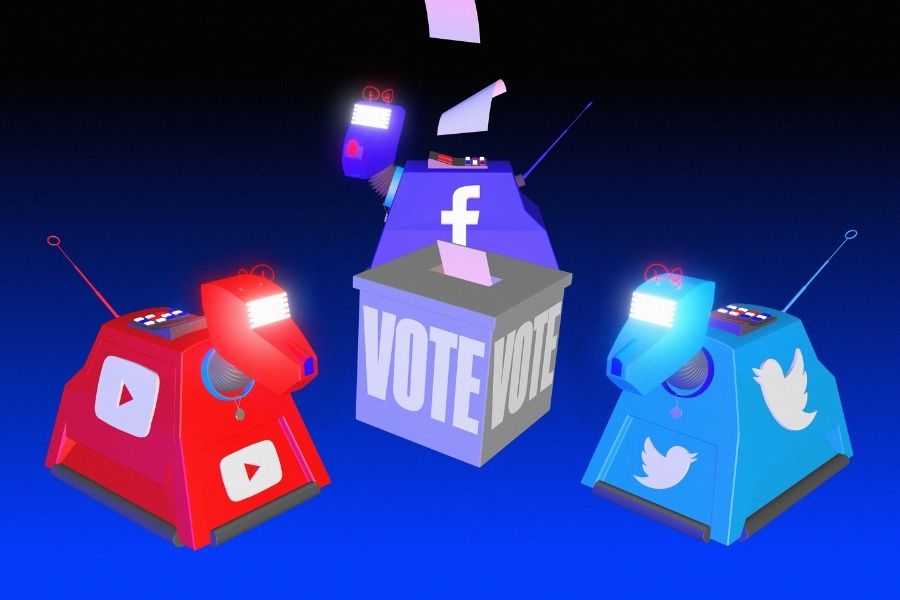

What to expect from Facebook, Twitter and YouTube on US election day

The social media platforms are key conduits for information and communication, and all of them were misused in the 2016 US elections. Here's how they plan to handle the challenges facing them in the Donald Trump vs Joe Biden contest, on and after November 3.

Shira Inbar/The New York Times

SAN FRANCISCO — Facebook, YouTube and Twitter were misused by Russians to inflame U.S. voters with divisive messages before the 2016 presidential election. The companies have spent the past four years trying to ensure that this November isn’t a repeat.

They have spent billions of dollars improving their sites’ security, policies and processes. In recent months, with fears rising that violence may break out after the election, the companies have taken numerous steps to clamp down on falsehoods and highlight accurate and verified information.

We asked Facebook, Twitter and YouTube to walk us through what they were, are and will be doing before, on and after Tuesday. Here’s a guide.

Before the election

©2019 New York Times News Service