ChatGPT vs. Hybrids: The future depends on our choices

Imposing the equivalent of a worldwide moratorium on generative artificial intelligence is absurd. But developers might want to pause and reflect on what they hope to achieve before releasing AI applications to the public.

Image: Shutterstock

On 29 March, some 1,000 AI funders, engineers and academics issued an open letter calling for an immediate pause on further development of certain forms of generative AI applications, especially those similar in scope to ChatGPT. Ironically, some of the letter's signatories are engineers employed by the largest firms investing in artificial intelligence, including Amazon, DeepMind, Google, Meta and Microsoft.

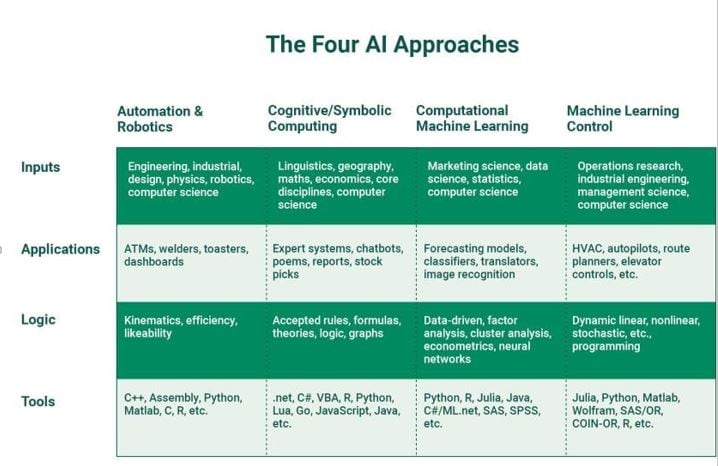

This call for reflection came in the wake of overwhelming media coverage of ChatGPT since the chatbot's launch in November 2022. Unfortunately, the media often fails to distinguish between generative methodologies and artificial intelligence in general. While various forms of AI can be dated back to before the Industrial Revolution, the decreasing cost of computing and the use of public domain software have given rise to more complex methods, especially neural network approaches. These generative AI methodologies face many challenges, but they also open up many possibilities for the way we (humans) work, learn and access information.

INSEAD’s TotoGEO AI lab has been applying various generative methodologies to business research, scientific research, educational materials and online searches. Formats have included books, reports, poetry, videos, images, 3D games and fully scaled websites.

In this article and others to follow, I will explain various generative AI approaches, how they are useful, and where they can go wrong, using examples from diverse sectors such as education and business forecasting. Many of the examples are drawn from our lab’s experience.

Decoding the AI jargon

AI jargon can be overwhelming, so bear with me. Wikipedia defines generative AI as “a type of AI system capable of generating text, images, or other media in response to prompts. Generative AI systems use generative models such as large language models to statistically sample new data based on the training data set that was used to create them.” Note the phrase “such as”. This is where things get messy.
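To make "statistically sample new data based on the training data" a little more concrete, here is a deliberately tiny sketch in Python. It is a toy word-level bigram model, far simpler than a large language model and not a description of how ChatGPT or our lab's tools work. It counts which word follows which in a short training text, then generates new text by drawing each next word in proportion to those counts. The function names and the training sentence are hypothetical, chosen only for illustration.

```python
import random
from collections import defaultdict

# Toy illustration only (hypothetical names): a word-level bigram model, not an LLM.

def train_bigram(words):
    """Count how often each word is followed by each other word in the training text."""
    counts = defaultdict(lambda: defaultdict(int))
    for current, nxt in zip(words, words[1:]):
        counts[current][nxt] += 1
    return counts

def sample(counts, start, length, rng):
    """Generate up to `length` words, drawing each next word in proportion
    to how often it followed the current word during training."""
    out = [start]
    for _ in range(length - 1):
        followers = counts.get(out[-1])  # .get avoids creating empty entries
        if not followers:
            break  # no continuation was ever observed, so stop generating
        choices = list(followers)
        weights = [followers[w] for w in choices]
        out.append(rng.choices(choices, weights=weights, k=1)[0])
    return out

training_text = "the model reads the data and the model writes new text".split()
model = train_bigram(training_text)
print(" ".join(sample(model, "the", 8, random.Random(0))))
```

Change the random seed and the output changes, yet every word pair it produces already occurred in the training text. Large language models also generate text by sampling from learned probabilities, but at vastly greater scale and with neural networks in place of simple counts.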

[This article is republished courtesy of INSEAD Knowledge, the portal to the latest business insights and views of The Business School of the World. Copyright INSEAD 2024]