Pragmatic Risk Management In A Tightly-Coupled World

Supply chain managers must face the fact that their job descriptions now include disaster preparedness, which is an especially daunting task as global operations become more complex.

One of the most publicized corporate failures in recent memory was the bankruptcy of General Motors in mid-2009, when the icon of American industry filed for Chapter 11 after years of mismanagement. But was mismanagement the root cause?

If truth be told, the bankruptcy of GM stems from a web of causality. Already struggling with crippling pension obligations and intransigent unions, the embattled automaker became prey to external forces. In 2008, Detroit’s long love affair with large pickups and SUVs (whose 15-20 per cent profit margins were far more attractive than regular car margins of three per cent) became a risk. As gas prices soared above US$4 a gallon, many cost-sensitive North American consumers simply stopped buying oversized vehicles. Anyone who still wanted an SUV, of course, found it more difficult to secure a loan. Prior to the financial crisis, it was common practice for consumers to use home equity loans to purchase vehicles and other big-ticket items. But as the value of real estate around the world collapsed, this source of financing dried up. Meanwhile, as job markets, stock markets and retirement funds fell in unison, the purchase of a new car, even a small one, became less and less feasible for most consumers. As a result of this perfect storm, GM’s sales plummeted. The rest, as they say, is history.

Although the effect of oil prices on demand may have been something GM’s risk management department understood, the U.S. housing market was probably not at the top of its radar screen until the financial crisis reared its ugly head. Could anyone have foreseen how the collapse of Lehman Brothers would affect the financial health of GM? This leads to a broader question: could traditional management tools have fended off this perfect storm, with its multiple, unsuspected and simultaneous hazards?

In March 2011, Japan was hit by an earthquake. It was followed by a tsunami, which in turn triggered one of the worst nuclear disasters in recent history. Because Japan has long been home to countless component manufacturers, the disruption to global supply chains was considerable. And as fate would have it, companies like Honda that had facilities in both Japan and Thailand were struck by a second disaster barely five months later, when tropical storm Nock-ten swept a deluge up to Bangkok’s city limits, turning 65 of Thailand’s 77 provinces into flood disaster zones and causing an estimated US$47.5 billion in economic damage.

Thailand’s development plans had strategically promoted the development of industrial clusters. As a consequence, a full third of global hard disk drive production had become concentrated in the cost-competitive Asian country. When disaster struck, the synergies the clustered firms had shared became shared risk, as seven major industrial estates were submerged under three metres of water. Many of the roughly 1,000 companies flooded in Thailand in 2011 had supply chain risk management programs in place. None of these programs anticipated the scale of the “worst-case scenario” that actually came to pass.

Although earthquakes, tsunamis and tropical storms are not necessarily correlated with one another, climate change implies that what insurance companies call a “force majeure” will become a routine occurrence. As a result, supply chain managers must face the fact that their job descriptions now include disaster preparedness, which is an especially daunting task as global operations become more complex. This article looks at how to address that issue.

RISK MANAGEMENT IN GLOBAL SUPPLY CHAINS

Globalization has created new opportunities and new threats. As sourcing from around the world made supply chains longer and more complex, the volatility inherent in production increased significantly. The number of supply chain members and the interactions among them have grown, exacerbating the lack of transparency in the operating environment. Company executives have increased profitability through ever-shorter times-to-market and product life-cycles, business process improvement, just-in-sequence manufacturing and vendor-managed inventory. But the technological progress, strategic partnerships and vertical integration that drove cost reductions also added to the supply chain’s vulnerability. Indeed, global supply chain (SC) networks are exposed to a number of potential threats, which themselves are constantly evolving.

SC networks are complex dynamic systems, not static ones: their structures evolve over time. Local disruptions typically propagate, leading to production shortages and contagion (Helbing et al. 2004). We can identify the most important nodes in the network by measuring their connectivity and embeddedness. But as research on logistics clusters gains influence, it is important to stress that clusters create both synergies and shared risk, a reality the current discussion does not adequately address.
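To make "connectivity and embeddedness" concrete, here is a minimal sketch on a small, entirely hypothetical network of (supplier, customer) pairs. It assumes two simple proxies, which are illustrative choices and not the measures used in the research cited: the number of direct trading links per firm, and the number of firms a disruption at that firm could reach downstream.

```python
from collections import defaultdict, deque

# Hypothetical directed supply network: (supplier, customer) pairs.
EDGES = [
    ("resin_co", "disk_maker_a"),
    ("resin_co", "disk_maker_b"),
    ("disk_maker_a", "pc_oem"),
    ("disk_maker_b", "pc_oem"),
    ("disk_maker_b", "server_oem"),
    ("chip_fab", "pc_oem"),
]

def build_graph(edges):
    """Map each supplier to the set of its direct customers."""
    graph = defaultdict(set)
    nodes = set()
    for supplier, customer in edges:
        graph[supplier].add(customer)
        nodes.update((supplier, customer))
    return graph, nodes

def degree(edges):
    """Connectivity proxy: number of direct trading links (in + out) per firm."""
    counts = defaultdict(int)
    for supplier, customer in edges:
        counts[supplier] += 1
        counts[customer] += 1
    return dict(counts)

def downstream_reach(graph, start):
    """Embeddedness proxy: how many firms a disruption at `start` could reach."""
    seen = set()
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for customer in graph.get(node, ()):
            if customer not in seen:
                seen.add(customer)
                queue.append(customer)
    return len(seen)

graph, nodes = build_graph(EDGES)
connectivity = degree(EDGES)
for firm in sorted(nodes, key=lambda n: (-downstream_reach(graph, n), n)):
    print(f"{firm:13s} links={connectivity[firm]} reach={downstream_reach(graph, firm)}")
```

In this toy network resin_co has only two direct links, yet a disruption there reaches four of the other five firms. A simple count of direct relationships misses exactly this kind of hidden importance.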

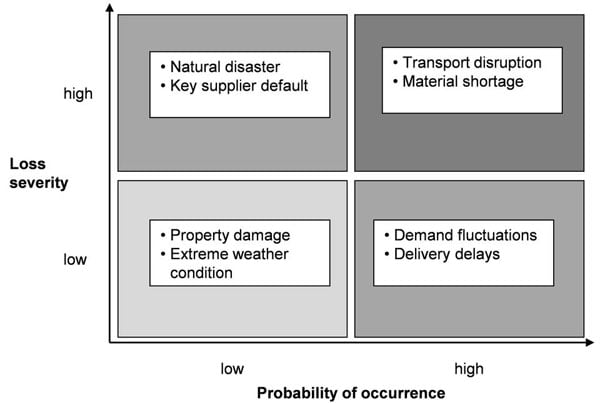

A typical risk management process begins by creating basic awareness. Heat maps (see Figure 1), two-dimensional charts that plot the likelihood of each scenario against its consequences, are widely used. These snapshots of impact and response are presented in a quadrant format that is easy to construct and easy to explain to executives. By ranking the intersections of likelihood and impact, managers can prioritize contingency plans, deciding how to react (or not react) to conceivable disruptions.
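A heat map's ranking logic fits in a few lines. This sketch assumes hypothetical 1-to-5 scales for likelihood and impact, splits each axis at its midpoint to assign a quadrant, and ranks scenarios by the product of the two scores. The scenarios, scales and quadrant labels are invented for illustration.

```python
from dataclasses import dataclass

@dataclass
class Scenario:
    name: str
    likelihood: int  # hypothetical scale: 1 (rare) to 5 (almost certain)
    impact: int      # hypothetical scale: 1 (minor) to 5 (severe)

    @property
    def score(self) -> int:
        # Simple ranking key: the product of the two heat-map axes.
        return self.likelihood * self.impact

    @property
    def quadrant(self) -> str:
        # Split each axis at its midpoint (3) to place the scenario in a quadrant.
        high_likelihood = self.likelihood >= 3
        high_impact = self.impact >= 3
        if high_likelihood and high_impact:
            return "act now"
        if high_impact:
            return "contingency plan"
        if high_likelihood:
            return "monitor"
        return "accept"

SCENARIOS = [
    Scenario("machine breakdown", likelihood=4, impact=2),
    Scenario("regional flood", likelihood=2, impact=5),
    Scenario("key supplier bankruptcy", likelihood=3, impact=4),
    Scenario("minor delivery delay", likelihood=2, impact=1),
]

ranked = sorted(SCENARIOS, key=lambda s: s.score, reverse=True)
for s in ranked:
    print(f"{s.name:25s} score={s.score:2d} quadrant={s.quadrant}")
```

The sketch also shows what the format cannot express. Each scenario is scored in isolation, so two events that strike together, or a disruption that spreads to other firms, never appear on the map.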

FIGURE 1

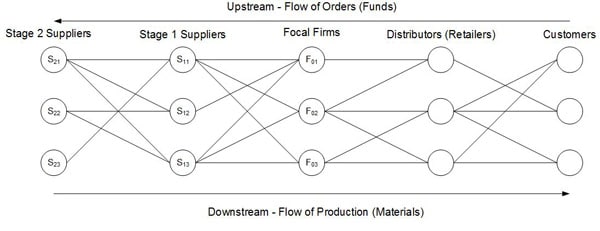

But think back to the failure of GM and ask yourself: what happens when more than two dimensions are in play? The industrial world is routinely subject to undetected dependencies and contagion effects. Even in the simplest of supply chains (retailer-wholesaler-distributor-factory), the bullwhip effect is triggered by the tiniest of disturbances and wreaks havoc as it propagates upstream. From any one vantage point within the system, it is hard to see the wave of destruction coming, let alone react appropriately (see Figure 2). But what we increasingly observe is more than the cost of being unprepared for the “unknown.” Like the drunk searching for lost keys only in the circle illuminated by a street lamp, we tend to narrow the scope of our actions to what is visible and conceivable. Indeed, the global operations of industry now span far more than four nodes, yet managers have progressively simplified their decision-support tools, partly to make them easier to communicate. And when it comes to risk management, the consequences of oversimplification can be devastating.
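The four-stage bullwhip effect can be reproduced with a small simulation. This is a minimal sketch of a standard textbook setup, not the model used in this article. It assumes each stage forecasts with a 4-period moving average, follows an order-up-to policy with a 1-period lead time, and may place negative orders (returns) so the model stays linear. Consumer demand is hypothetical: 100 units per period with small random noise.

```python
import random
import statistics

LEAD_TIME = 1   # hypothetical replenishment lead time, in periods
WINDOW = 4      # hypothetical moving-average forecasting window
STAGES = ["retailer", "wholesaler", "distributor", "factory"]

def place_orders(incoming, lead_time=LEAD_TIME, window=WINDOW):
    """Orders one stage passes upstream, given the orders it receives.

    The order-up-to level is (lead_time + 1) times a moving-average forecast,
    so each order equals observed demand plus the change in that level.
    """
    k = (lead_time + 1) / window
    return [incoming[t] + k * (incoming[t] - incoming[t - window])
            for t in range(window, len(incoming))]

rng = random.Random(42)
consumer_demand = [100 + rng.gauss(0, 5) for _ in range(5000)]

series = consumer_demand
variances = {"consumer": statistics.variance(series)}
for stage in STAGES:
    series = place_orders(series)  # each stage reacts to the orders below it
    variances[stage] = statistics.variance(series)

for name, var in variances.items():
    print(f"{name:12s} order variance = {var:10.1f}")
```

Consumer demand barely fluctuates, yet order variance grows at every step from retailer to factory. Under these assumptions the retailer's orders alone are expected to be about 2.5 times as variable as consumer demand, and each stage further up amplifies what it receives.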

FIGURE 2

ADDRESSING COMPLEXITY

So how can we pragmatically manage risk in complex networks? To start, hidden relationships between firms must be brought to light. Lehman Brothers analyzed risk from banks that sold it mortgage-backed securities. But Lehman was also exposed to the default risk of low-income mortgage-holders. This isn’t just an issue for the financial sector. In manufacturing, risk assessments are also frequently applied only to first-tier suppliers. In fact, procurement teams often don’t even know how many tiers of suppliers are hidden behind direct partners. It is hard to blame managers for this. When it comes to producing things like cars and heavy equipment, the fan-out of suppliers is staggering, and virtually impossible to monitor individually. Nevertheless, all original equipment manufacturers (OEMs) are fully exposed to the risks lurking upstream.

Because hazardous events can strike several firms simultaneously, as in Thailand or Japan, we distinguish between two forms of dependency:

- Structural dependencies within industries, arising from dynamically changing business relationships

- Event dependencies, where hazardous events hit groups of firms either independently or all at the same time

Our research generalizes the behavior of dependent risk profiles within complex networks of any type. Abstract flows such as money are difficult to follow. The flow of physical goods, however, is comparatively easy to model, so we studied how dependencies behave in industrial SC networks, on the assumption that the insights gained would transfer to financial networks.

A NEW MODEL OF RISK MANAGEMENT

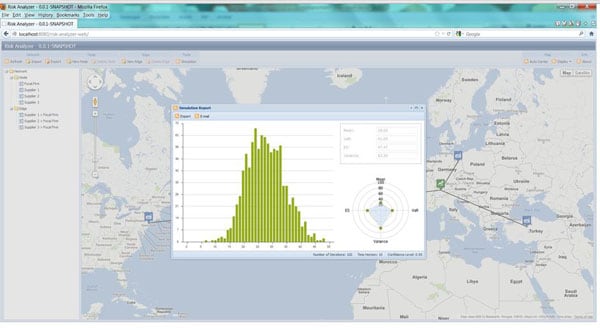

To resolve the dilemma created by a manager’s need to simplify and the fact that two-dimensional maps of network risk do not depict reality, we opened the toolbox of natural science. Without going into mathematical detail, this article will trace the development of a useful heuristic, in which a network model was progressively refined to depict interconnected activity in an industrial system observed over time.

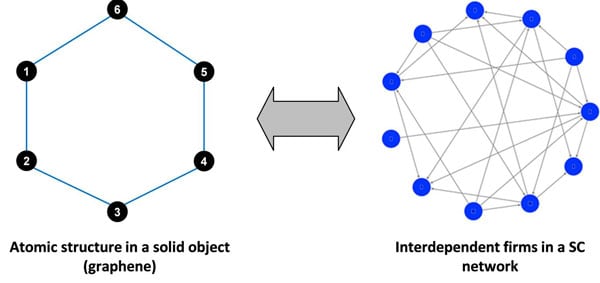

We knew that the condition of the whole was the result of the activity of its tiniest components and their interactions with one another. Drawing upon physics, the research compared the behavior of supply chains to that of the molecules in a solid object.

FIGURE 3

In systems of particles, collective behavior can be observed at the molecular level, but assuming an even distribution of interlinked nodes would have been unrealistic for a supply network. It became clear that the intensity of interaction between firms varies with the purchasing power (transactional volume) of the companies doing business with one another, and that their business relationships occasionally shift. Extending the metaphor, if particles/firms interact with variable intensity, the effect of individual particles/firms on one another will be stronger or weaker. At this point, complex network theory, which parameterizes interaction, was added to the model. Firms in a tightly-coupled network interact and react to one another, so that information flows continuously between active participants, but with varying intensity. To use current vernacular, the flow occasionally “goes viral.” Firms also constantly search for new business opportunities and switch to alternative suppliers for strategic reasons.
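Interactions of varying intensity are often represented as a weighted threshold cascade. The sketch below is not the authors' model. It assumes hypothetical link weights, given as the share of each firm's inputs bought from each supplier, and a hypothetical rule that a firm halts once it has lost 40 per cent or more of its inputs.

```python
# Hypothetical weighted links: customer -> {supplier: share of its inputs}.
INPUT_SHARES = {
    "assembler": {"part_maker_a": 0.7, "part_maker_b": 0.3},
    "part_maker_a": {"raw_x": 0.5, "raw_y": 0.5},
    "part_maker_b": {"raw_y": 0.2, "raw_z": 0.8},
}
FAILURE_THRESHOLD = 0.4  # hypothetical: a firm halts if it loses >= 40% of inputs

def cascade(initial_failures, shares, threshold=FAILURE_THRESHOLD):
    """Propagate failures downstream until no further firm crosses the threshold."""
    failed = set(initial_failures)
    changed = True
    while changed:
        changed = False
        for firm, suppliers in shares.items():
            if firm in failed:
                continue
            # Share of this firm's inputs that come from failed suppliers.
            lost = sum(w for s, w in suppliers.items() if s in failed)
            if lost >= threshold:
                failed.add(firm)
                changed = True
    return failed

print(sorted(cascade({"raw_y"}, INPUT_SHARES)))
print(sorted(cascade({"raw_z"}, INPUT_SHARES)))
```

raw_y supplies both part makers. Its failure topples part_maker_a, which depends on it for half of its inputs, and then the assembler, while part_maker_b, which relies on raw_y for only 20 per cent, survives. Change the link intensities and the same initial failure produces a different cascade.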

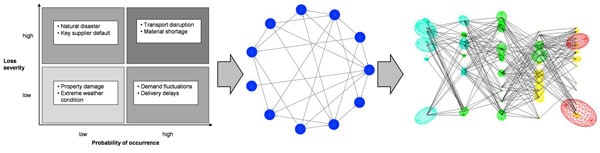

In a subsequent refinement, the model took into account that flows of goods and information in a network do not move smoothly but are inherently uncertain. Events in most systems arrive at irregular intervals. Indeed, at each stage of any process, unexpected things happen: a machine breaks down, labour goes on strike, a tornado hits the town. All of these are plausible and happen from time to time. They do not, however, occur at a predictable rate; they strike at random. This means we cannot assume that such disruptions will arrive like clockwork, say one per week. Instead, there could realistically be three events in one week, followed by a period of quiet, then more bad news. Furthermore, the impact of each event will vary widely. Some will lead to catastrophe; others will fall within the range of manageable divergence from expectations (a little less produced than planned, but enough to satisfy the customer and keep cash flowing). In this way, the simulation improved progressively, developing from an initial, static picture, in which events occur regularly and in sequence, to one in which events propagate throughout the system (Figure 4). We described the random arrival of disruptions mathematically, using a pattern comparable to the occurrence of earthquakes in Italy, the arrival of customers in a queue, or the decay of radioactive material.
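Earthquakes, queue arrivals and radioactive decay are classic examples of a Poisson process. The text does not name its exact model, so this is a minimal sketch that assumes a Poisson process with a hypothetical average of one disruption per week. It also assumes Pareto-distributed impacts, chosen only to illustrate that the severity of each event varies widely.

```python
import random
import statistics

RATE_PER_WEEK = 1.0  # hypothetical average: one disruption per week
WEEKS = 52

def poisson_event_times(rate, horizon, rng):
    """Event times (in weeks) of a homogeneous Poisson process on [0, horizon).

    Exponential gaps between events mean disruptions bunch up and thin out
    at random instead of arriving once a week.
    """
    times = []
    t = rng.expovariate(rate)
    while t < horizon:
        times.append(t)
        t += rng.expovariate(rate)
    return times

def weekly_counts(times, weeks):
    """Number of events falling in each week."""
    counts = [0] * weeks
    for t in times:
        counts[int(t)] += 1
    return counts

rng = random.Random(7)
events = poisson_event_times(RATE_PER_WEEK, WEEKS, rng)
counts = weekly_counts(events, WEEKS)
# Hypothetical heavy-tailed impacts: most events are minor, a few are severe.
losses = [rng.paretovariate(1.5) for _ in events]

print("events per week:", counts)
print("quiet weeks:", counts.count(0), "| busiest week:", max(counts))
if losses:
    print("largest loss / median loss:", round(max(losses) / statistics.median(losses), 1))
```

Even though the long-run average is one event per week, a typical simulated year contains many quiet weeks alongside weeks with two or more disruptions. A few events dwarf the median loss.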

THE PICTURE THAT EMERGED FROM THE MODEL

Traditional static models of enterprise risk were replaced by our dynamic model (Figure 4). Instead of using a snapshot of one disruption to represent the firm’s risk exposure (often extrapolated over months), we added the dynamic uncertainty of the disruption and then its propagation effects through the entire network. The model went live by simulating what was known, that is, the system’s most familiar, stable behaviour. This focused on a number of different firms that all dealt in the same product or product type. They did not look connected at first glance, because they differed in geographic location, scale and number of employees, and sat in different tiers of the SC network. Once the list of diverse firms (depicting a supply market) was assembled, the model began to simulate the simplest forms of uncertainty: swings in performance, such as production yield or delivery shortages. These could trigger chains of bankruptcies not only among the firms’ own suppliers but also among the customers they supplied (Mizgier et al. 2012). The research then explored what would happen when disaster struck. To simulate this, the researchers adjusted the parameters that represent the features of “worst-case” scenarios. This relaxed some of the initial boundary conditions, for instance by letting further disruptions hit during the recovery time (again, something managers rarely anticipate). As a result, the model showed much higher losses than would have occurred had events happened only rarely. For example, if an important machine breaks down in a plant that has just been restored after a fire, the outage, and with it the total loss, is prolonged by a random amount. This, in turn, leads to much higher accumulated damage than simple models (like heat maps) predict.
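The effect of letting disruptions strike during recovery can be illustrated with a toy model. Every element is a hypothetical assumption rather than the simulation from Mizgier et al.:

- disruptions arrive as a Poisson process
- recovery times are exponentially distributed
- repairs are queued, so a new hit extends the current outage
- a safety-stock buffer absorbs the first five days of each continuous outage

The sketch compares an isolated view, in which each disruption is judged on its own against the buffer, with a compounded view, in which overlapping disruptions merge into one outage.

```python
import random

DAYS = 365
RATE_PER_DAY = 1 / 20   # hypothetical: one disruption every 20 days on average
MEAN_RECOVERY = 8.0     # hypothetical mean recovery time, in days
BUFFER_DAYS = 5.0       # hypothetical safety stock, in days of output

def simulate(rng):
    """Return (isolated_loss, compounded_loss) in lost production days for one year."""
    t, events = 0.0, []
    while True:
        t += rng.expovariate(RATE_PER_DAY)
        if t >= DAYS:
            break
        events.append((t, rng.expovariate(1 / MEAN_RECOVERY)))

    # Simple view: each disruption is judged on its own against the buffer.
    isolated = sum(max(0.0, d - BUFFER_DAYS) for _, d in events)

    # Compounded view: a hit during recovery extends the same outage,
    # so the buffer only cushions the start of each continuous outage.
    compounded, outage_len, outage_end = 0.0, 0.0, -1.0
    for start, duration in events:
        if start < outage_end:        # still recovering from earlier hits
            outage_len += duration
            outage_end += duration
        else:                         # plant was running: close the previous outage
            compounded += max(0.0, outage_len - BUFFER_DAYS)
            outage_len, outage_end = duration, start + duration
    compounded += max(0.0, outage_len - BUFFER_DAYS)
    return isolated, compounded

rng = random.Random(2012)
trials = [simulate(rng) for _ in range(2000)]
mean_isolated = sum(i for i, _ in trials) / len(trials)
mean_compounded = sum(c for _, c in trials) / len(trials)
print(f"isolated view:   {mean_isolated:6.1f} lost days per year")
print(f"compounded view: {mean_compounded:6.1f} lost days per year")
```

Because the buffer is consumed only once per continuous outage, clustered hits turn several tolerable disruptions into one costly one. Under these assumptions the compounded loss can never be lower than the isolated estimate, and on average it is higher.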

The simulation made clear that it is simply not good enough to look at individual disruption profiles because, as Japan and Thailand showed all too vividly, disruptions may come in “multi-packs.” The most useful results for risk managers were achieved by asking what would happen under various circumstances, such as:

- What is the impact if we move all suppliers to one location? What if we choose suppliers to diversify our supplier base across multiple regions?

- What is the impact if we redistribute the purchasing volumes among the supplier base?

- Which supplier contributes most to the total loss?
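Questions like these lend themselves to Monte Carlo "what-if" runs. The sketch below is not the authors' model. It assumes a hypothetical three-supplier base and independent yearly probabilities of a regional catastrophe and of a firm-specific failure. Loss is measured as the share of purchasing volume lost. Swapping regions addresses the first question, editing the volume shares addresses the second, and the per-supplier contribution addresses the third.

```python
import random

# Hypothetical supplier bases: supplier -> (region, share of purchasing volume).
CONCENTRATED = {"s1": ("thailand", 0.4), "s2": ("thailand", 0.3), "s3": ("thailand", 0.3)}
DIVERSIFIED = {"s1": ("thailand", 0.4), "s2": ("mexico", 0.3), "s3": ("poland", 0.3)}

P_REGIONAL = 0.05  # hypothetical yearly chance that a region is hit (flood, quake)
P_LOCAL = 0.03     # hypothetical yearly chance of a firm-specific failure

def simulate_year(base, rng):
    """Return {supplier: lost volume share} for one simulated year."""
    regions = sorted({region for region, _ in base.values()})  # sorted for reproducibility
    hit = {r for r in regions if rng.random() < P_REGIONAL}
    return {
        name: share
        for name, (region, share) in base.items()
        if region in hit or rng.random() < P_LOCAL
    }

def evaluate(base, years=20000, seed=1):
    """Expected loss, P(loss >= 50%), and average loss contributed by each supplier."""
    rng = random.Random(seed)
    totals = []
    contribution = dict.fromkeys(base, 0.0)
    for _ in range(years):
        lost = simulate_year(base, rng)
        totals.append(sum(lost.values()))
        for name, share in lost.items():
            contribution[name] += share / years
    expected = sum(totals) / years
    severe = sum(t >= 0.5 for t in totals) / years
    return expected, severe, contribution

results = {}
for label, base in [("concentrated", CONCENTRATED), ("diversified", DIVERSIFIED)]:
    results[label] = evaluate(base)
    expected, severe, contribution = results[label]
    worst = max(contribution, key=contribution.get)
    print(f"{label:12s} expected={expected:.3f} P(loss>=50%)={severe:.3f} top contributor={worst}")
```

Under these assumptions the expected loss is about the same for both configurations. What diversification changes is the probability of losing half the volume or more, a difference that a single expected-loss figure would hide.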

Another revelation, which runs counter to common sense, was that backup suppliers do not necessarily mitigate single-source risk. There can be several root causes for this. A multi-sourcing strategy in the second tier will not protect an OEM if an undetected single source is embedded further up the chain. If the backup supplier is brought down by the same raw-material supplier that caused the first supplier to default, little is gained.
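A hidden common source can be flagged by tracing each alternative supplier's full upstream ancestry and intersecting the results. This minimal sketch assumes a hypothetical map from each firm to the firms it buys from. The intersection only shows shared dependencies: whether a shared firm is a true single point of failure depends on which inputs each firm actually buys, so the output is a list of candidates to review, not a verdict.

```python
from collections import deque

# Hypothetical upstream map: firm -> firms it buys from.
UPSTREAM = {
    "oem": ["tier1_primary", "tier1_backup"],
    "tier1_primary": ["tier2_a"],
    "tier1_backup": ["tier2_b"],
    "tier2_a": ["resin_plant"],
    "tier2_b": ["resin_plant", "tier3_c"],
    "tier3_c": [],
    "resin_plant": [],
}

def ancestors(firm, upstream):
    """All firms that `firm` depends on, directly or indirectly."""
    seen, queue = set(), deque(upstream.get(firm, []))
    while queue:
        node = queue.popleft()
        if node not in seen:
            seen.add(node)
            queue.extend(upstream.get(node, []))
    return seen

def hidden_common_sources(alternatives, upstream):
    """Firms that every alternative supplier of one input depends on."""
    sets = [ancestors(a, upstream) | {a} for a in alternatives]
    return set.intersection(*sets)

print(hidden_common_sources(["tier1_primary", "tier1_backup"], UPSTREAM))
```

Here the primary and backup suppliers look independent at the first and second tiers, yet both trace back to resin_plant. A default there could bring down both.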

TAKING PRAGMATIC ACTION

A model is never “the answer” to any manager’s question. Nor can it ever be a full depiction of reality. It can, however, be useful, especially when the tools it replaces are oversimplified or misleading. One of the key outcomes of our scientific expansion of the management toolbox is the ability to go beyond two-dimensional heuristics.

The history of supply chain management is one of loading our models ever more fully, whether with our understanding of cost or of uncertainty. Optimization logic should likewise move away from cost-cutting toward improving overall productivity over long periods of time. The interdisciplinary view taken by the model identifies potential culprits and localizes problems early. This kind of tool helps decision-makers mature from drawing flat scenarios to generating multi-dimensional landscapes of events.

Whether GM could have anticipated its perfect storm remains a matter of conjecture, because even the most realistic model depends heavily on data and managerial judgment. However, given the popularity of industry clustering, the hard disk drive industry in Thailand should have recognized that its geographic concentration was creating vulnerability. Even managers with limited access to data will find that public sources like iSuppli are more than adequate for such analysis, and affordable at that. Bottlenecks become visible once their consequences are realistically calculated.

Assuring the highest possible data quality is the most important step in the modeling process. Despite its realistic approach, our risk model could not aggregate all effects into a single, elegant representation, because firms do not all react to a threat in the same way, whether it is acute or pending; each has its own culture of decision-making, authority structures and gatekeepers. The model must therefore be implemented to mimic this range of real behaviors, which is only possible with data that captures these phenomena. Another recommendation is not to look at individual businesses separately but to develop an enterprise-wide view of total risk exposure. This will improve the quality of bottleneck and concentration diagnoses. Collecting the data will be a time-consuming process with no short-term ROI, but as crises drive change and regulators become more exacting, the value of the effort will soon become apparent.

Reprint from Ivey Business Journal

[© Reprinted and used by permission of the Ivey Business School]