Return on AI: Why Nvidia's Q2 results are so keenly anticipated

The numbers, and commentary from CEO Jensen Huang, will be microscopically examined for a sense of just how much further AI is expected to grow

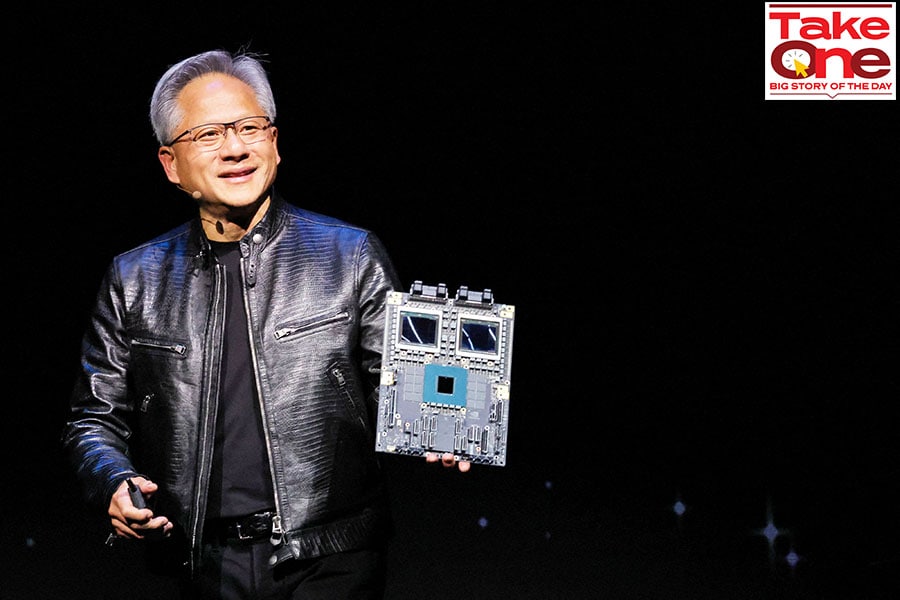

(File) Nvidia CEO Jensen Huang presents the NVIDIA Blackwell platform at an event ahead of the COMPUTEX forum, in Taipei, Taiwan, June 2, 2024.

Image: REUTERS/Ann Wang

Nvidia Corp, which rubs shoulders with Apple and Microsoft as the most valuable public companies in the world, reports its fiscal second quarter results later today in the US (August 29, 2.30 am in India).

While capital markets investors are eager to find out how much more upside there is likely to be on the stock, the results and commentary from Co-Founder and CEO Jensen Huang may also give us a sense of what’s next in the world of generative artificial intelligence based on large language models (LLMs).

Nvidia’s data centre revenues have gone from $2.37 billion in Q2 of fiscal year 2022 (Nvidia follows a Feb-Jan financial calendar) to $10.32 billion in Q2 of FY24. In Q1 of FY25, that figure was $22.6 billion. The company’s projection for Q2 FY25 total revenue is $28 billion, most of which will come from sales to the hyperscalers and other data centre customers, while the gaming segment, which has declined, is a distant second.
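To put those data centre figures in proportion, the short calculation below works out the growth multiples between the quarters quoted above. It is only an illustrative sketch: the revenue numbers are the ones cited in this article, the most recent first-quarter figure is labelled Q1 FY25 here, and the function name `growth_multiple` is a hypothetical helper introduced for this example.

```python
# Data centre revenue figures quoted in the article, in billions of US dollars.
data_centre_revenue = {
    "Q2 FY22": 2.37,
    "Q2 FY24": 10.32,
    "Q1 FY25": 22.6,
}


def growth_multiple(start: float, end: float) -> float:
    """Return how many times larger `end` is than `start`."""
    return end / start


# Growth over the two years between Q2 FY22 and Q2 FY24.
two_year_multiple = growth_multiple(
    data_centre_revenue["Q2 FY22"], data_centre_revenue["Q2 FY24"]
)

# Growth over the three quarters between Q2 FY24 and Q1 FY25.
latest_multiple = growth_multiple(
    data_centre_revenue["Q2 FY24"], data_centre_revenue["Q1 FY25"]
)

print(f"Q2 FY22 to Q2 FY24: {two_year_multiple:.1f}x")
print(f"Q2 FY24 to Q1 FY25: {latest_multiple:.1f}x")
```

On these figures, data centre revenue more than quadrupled over two years and then roughly doubled again within the following three quarters.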

The dominance of Nvidia’s chips in the data centres that are powering the world’s biggest AI models has led commentators on financial platforms such as Bloomberg to describe the upcoming earnings as a “macro event”.

“Part of it is, Nvidia is the face of AI at this point, or one of the faces of AI,” Alvin Nguyen, senior analyst at Forrester Research, said in an interview with Forbes India on August 24. “That's part of being essentially number one by a large dominant margin.”

Neil Shah, vice president of research at Counterpoint Technology Market Research, echoed this in an interview with Forbes India on August 24: Nvidia, for now, “is the only show in town” when it comes to providing these high-end processors, or accelerators as they are called.

The deciding factor has been that Nvidia also developed a very sophisticated software platform that makes it easy for developers to use its chips and build powerful applications. Its gaming heritage helped, and Huang also led timely acquisitions to add heft and depth to the platform.

Over the last two to three years, the world has witnessed the rise of ChatGPT and the underlying LLMs that power it and other such generative AI models. This has propelled much of the AI industry, taking many companies by surprise, Shah said, with the power of LLMs simplifying and automating a plethora of tasks.

Training such models to higher accuracy requires heavy-duty compute power, in addition to good data. “In running just one epoch, which could involve billions of parameters, it takes like hundreds of millions of dollars,” Shah said. An “epoch” in this context refers to one full pass through a data set in training an AI model.
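For readers unfamiliar with the term, the toy sketch below shows what counting epochs looks like in a training loop. It is a deliberately tiny illustration, not how an LLM is trained: the three-point data set, the single `weight` parameter, the learning rate and the number of epochs are all assumptions made up for this example.

```python
# Hypothetical toy data set: three points on the line y = 3x.
dataset = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)]

weight = 0.0          # the single model parameter (an LLM has billions)
learning_rate = 0.05  # assumed step size
num_epochs = 20       # assumed number of full passes over the data

examples_seen = 0
for epoch in range(num_epochs):
    # One epoch: every example in the data set is visited exactly once.
    for x, y in dataset:
        error = weight * x - y
        # Gradient step on the squared error 0.5 * error**2.
        weight -= learning_rate * error * x
        examples_seen += 1

print(f"examples processed: {examples_seen}")
print(f"learned weight: {weight:.3f}")
```

The cost of an epoch scales with both the size of the data set and the size of the model, which is why a single pass over a very large corpus with billions of parameters is so expensive.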

“Just to train it once. And then inferencing and other costs are different. The amount of energy required, of compute required is enormous,” he said. In addition to the hyperscalers, companies such as OpenAI and Mistral are developing these models, seeking to sell their capabilities as a service to large businesses, so that those businesses' app developers can integrate the features into their end-user applications.

In all of this, Nvidia has emerged as the one provider of the processors needed for all the compute. The competitive edge, however, was that “they had an entire ecosystem of GPUs, which could do all the training as well as inferencing effortlessly”, Shah added. “Silicon is one thing. Even AMD can make silicon, Intel can make silicon, Qualcomm can make silicon. But how do you optimise the entire software stack and make it easy for developers to train those models efficiently?”

That is what Nvidia has been doing with GPUs for gamers for almost 20 years now, he said, which is an important reason for its success. The company translated that know-how to the AI processing arena. As a result, Nvidia offers a singular combination of hardware and software that can be scaled to any application that needs GPUs, with the help of its software called CUDA (Compute Unified Device Architecture).

CUDA is a parallel computing platform and programming model developed by Nvidia. It allows developers to use Nvidia GPUs (Graphics Processing Units) for general-purpose computing tasks, not just for rendering graphics.

“That has been their secret sauce,” Shah said. And in addition to the software, Nvidia has developed the networking tech as well, because the AI models need high bandwidth networking to run quickly from the cloud.

In the longer term, companies such as AMD and Intel are scrambling to catch up and gain market share, and the hyperscalers would encourage them in that effort so as to reduce and de-risk their dependence on Nvidia. The hyperscalers are also developing their own chips, such as Google’s Tensor processors.

On August 19, AMD announced it was acquiring ZT Systems, a hyperscale systems design and original device maker for GPU rack systems, in a $4.9 billion transaction. This is expected to help AMD bolster and further develop its own AI GPU systems software.

And at Intel, “they've bought ASML's best equipment. And I think Samsung just got access to it, but Intel has a year headstart with what should be the best,” Nguyen, at Forrester, points out. He’s referring to the machines, supplied by the company ASML, that are needed to make very high-end semiconductor processors.

There are important development milestones to cross here, but Intel might be on its way, he said. And so long as the interest and innovation in LLM-based AI applications continue, demand from the hyperscalers and others for the GPUs that can run those models and applications will remain correspondingly high.

That’s the big if. Because innovation can come from anywhere, and in unexpected areas, just as Nvidia’s rise shows. For example, just as software plays a central role in making Nvidia’s GPUs easy to use for AI, new software solutions could come up that allow less powerful processors from other vendors to be harnessed to deliver the same results.

Getting back to Nvidia’s earnings, “the financials, the fundamentals should look great,” Nguyen said. “In the short term, Nvidia is very well positioned.”