Will Nvidia's AI gold rush continue?

The meteoric rise of the California-based chipmaker's valuation has revived memories of the dot-com era, when the internet was booming much as AI is today.

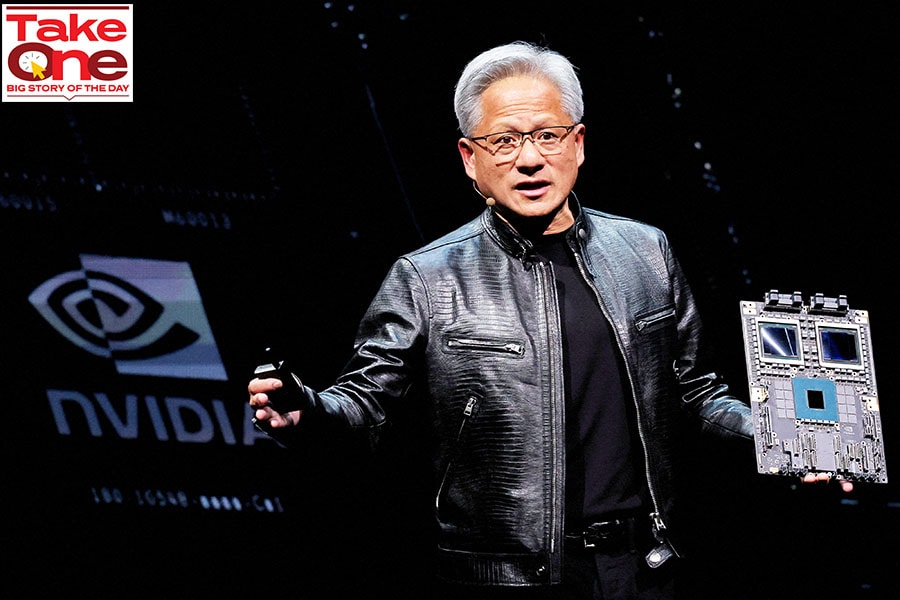

Nvidia CEO Jensen Huang presents the company's Blackwell platform at an event ahead of the COMPUTEX forum in Taipei, Taiwan, on June 2, 2024. Image: Reuters/Ann Wang