In India, 81.9 percent of content actioned on Facebook is spam

Facebook's interim transparency report for India, released after those of Google and Koo, states that its country-level granular data is less reliable and is a 'directional best estimate'

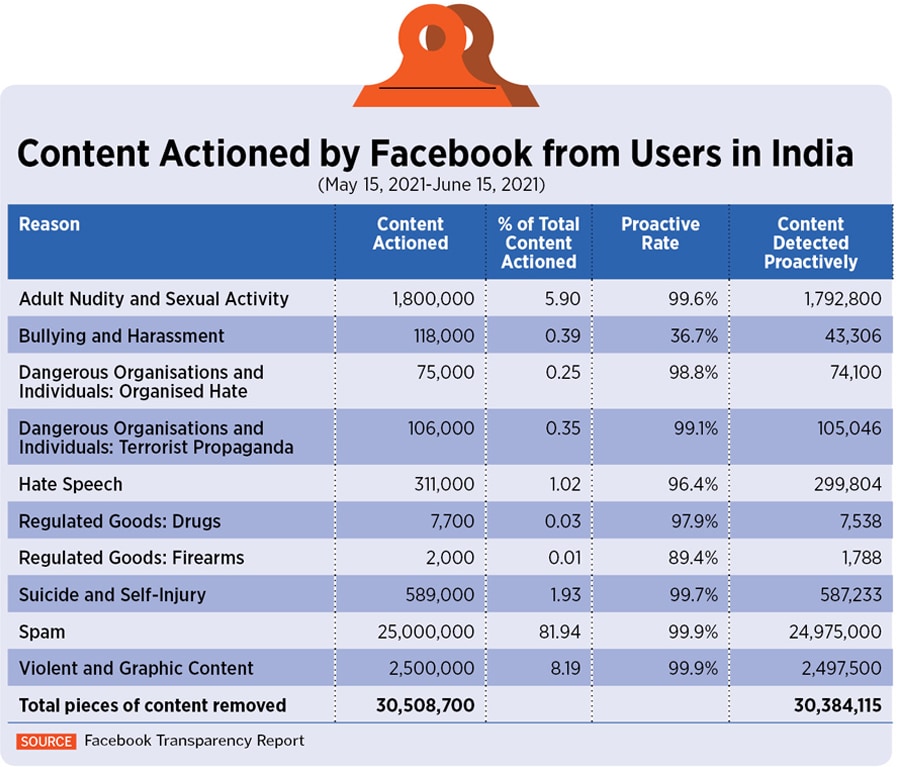

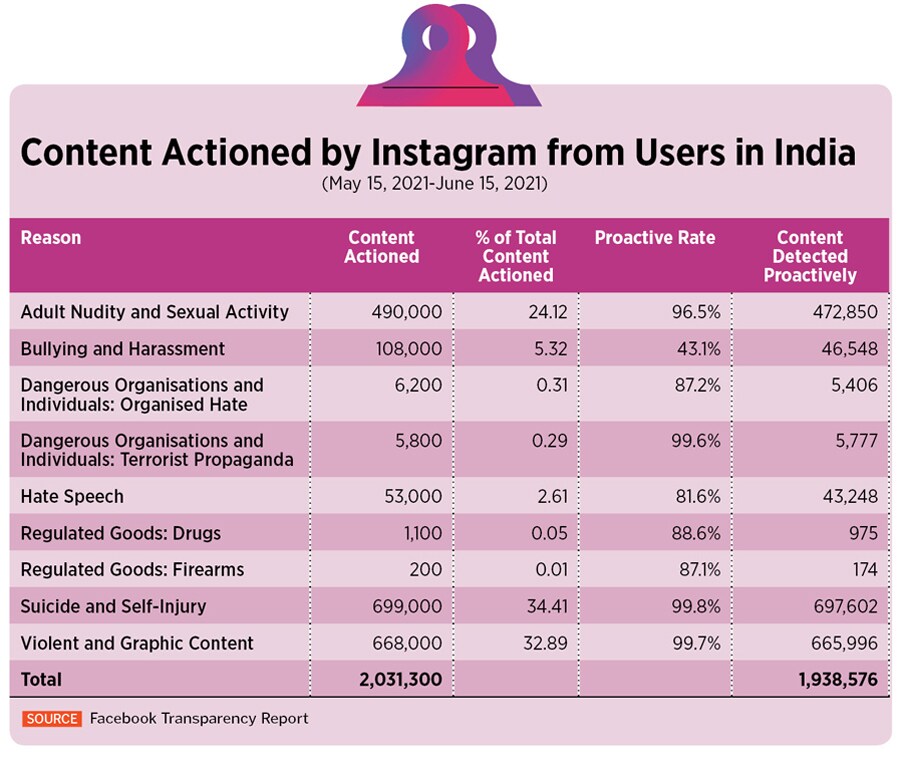

Of the 30.5 million pieces of content posted by users in India that Facebook actioned between May 15 and June 15, 81.9 percent was spam. Violent and graphic content came next, accounting for 8.2 percent of all content actioned. On Instagram, by contrast, of the 2.03 million pieces of content actioned, 34.4 percent was actioned for depicting suicide and self-injury, followed by violent and graphic content at 32.9 percent.

Actioning content (posts, photos, videos or comments) can mean removing it, covering disturbing videos and photos with a warning, or even disabling accounts. Problematically, Facebook has not disclosed the total number of pieces of content reported by users or detected by its algorithms, which makes it harder to assess the scale at which problematic content is flagged (if not actioned) on Facebook.

Facebook released its interim report for the period from May 15 to June 15, 2021 at 7 pm on July 2, about five weeks after the deadline for compliance with the Intermediary Rules lapsed. The final report, which will include data from WhatsApp, will be released on July 15. Apart from Google, Facebook and Koo, no other social media company, including Indian entities such as MyGov and ShareChat, has published a transparency report.

Facebook actions content across 10 categories on its main platform and across nine on Instagram. The only difference is spam, which is not reported for Instagram. According to the report, Facebook is working on “new methods” to measure and report spam on Instagram.

Does Facebook fare better than Instagram at automatically detecting problematic content?