AI and human ethics: On a collision course

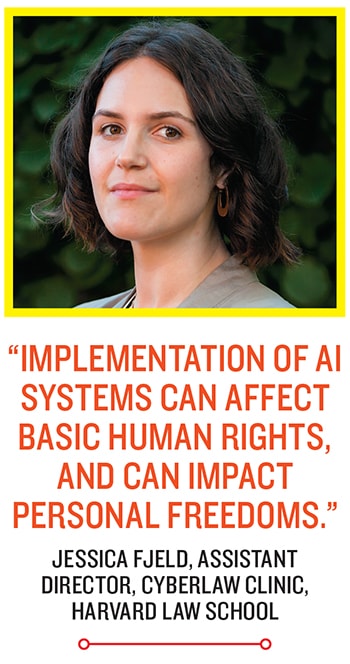

AI systems can give rise to issues related to discrimination, personal freedoms, and accountability, but there can be ways to correct them

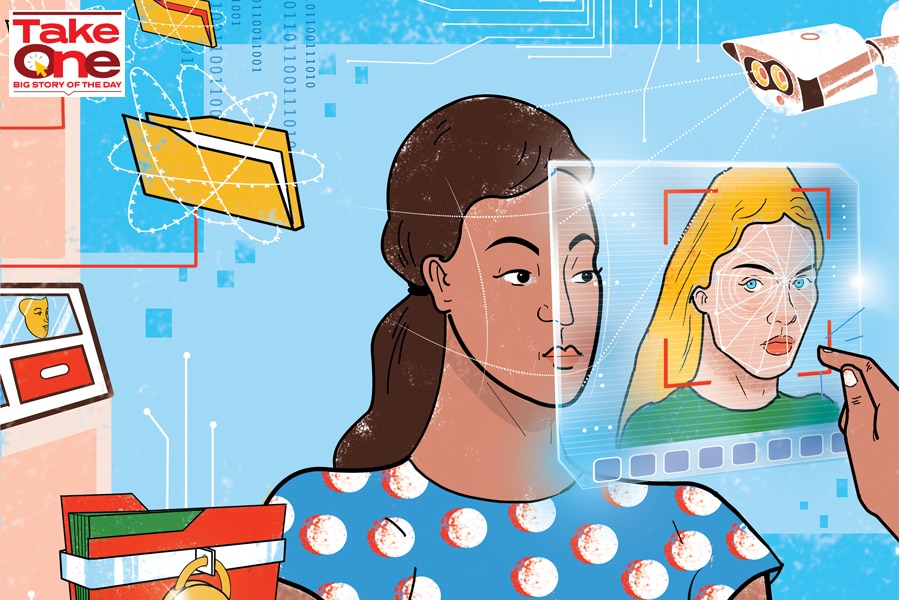

Illustration: Chaitanya Surpur

As the use of artificial intelligence (AI) becomes increasingly popular among private companies and government agencies, there are growing concerns over a plethora of ethical issues arising from its use. These concerns range from various kinds of bias, including those based on race and gender, to transparency, privacy and personal freedoms. There are further concerns related to the gathering, storage, security, usage and governance of data, the building block on which an AI system is built.

To better understand the root of these issues, we must look at these building blocks of AI. Consider how data helps in predicting the weather. If there are accurate systems today to predict a cyclone forming over an ocean, it is because these systems have been fed data about various weather parameters gathered over many decades. The volume and range of this data enable the system to make increasingly precise predictions about the speed at which the cyclone is moving, when and where it is expected to make landfall, the wind velocity and the volume of rain. Had the data been inadequate or of poor quality to begin with, the prediction mechanism could not have been built.

Similarly, in AI systems, algorithms (the sets of steps that a computer follows to solve a problem) are fed data in order to solve problems. The solution that an algorithm comes up with depends solely on the data it has received; it does not, and cannot, consider possibilities outside that fixed dataset. So if an algorithm receives data only about white, middle-aged men who may or may not develop diabetes, it does not even know of the existence of non-white, young women who might also develop diabetes. Now imagine that such an AI system were developed in the US or in China and then deployed in India to predict the rate of diabetes in a city or state.

“We get training datasets, and we try to learn from them and try to make inferences from it about the future,” says Carsten Maple, professor of Cyber Systems Engineering at the University of Warwick’s Cyber Security Centre (CSC), and a Fellow of the Alan Turing Institute in the UK. “The problem is, when we get this training data, we don’t know how representative it is of the whole set. So, if all we saw are white faces and think we have a complete set, then the machine will also see the same thing. This is what Timnit [Gebru, a computer scientist who quit her position as technical co-lead of the Ethical Artificial Intelligence Team at Google last December] had pointed out. Companies and governments should be able to say whether or not the datasets are representative.”
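One rough way to picture the representativeness question Maple raises is to compare each group's share of a training dataset with its share of the population the system is meant to serve. The sketch below is illustrative only: the function name `representation_gaps`, the group labels, the reference shares and the 5-percentage-point tolerance are all assumptions made for this example, and it presumes that a trustworthy reference distribution (a census, for instance) is available, which is often the hard part.

```python
from collections import Counter


def representation_gaps(sample_groups, reference_shares, tolerance=0.05):
    """Return groups whose share of the sample differs from the
    reference share by more than `tolerance` (hypothetical helper)."""
    counts = Counter(sample_groups)
    total = len(sample_groups)
    gaps = {}
    for group, expected in reference_shares.items():
        # A group missing from the sample has an observed share of zero.
        observed = counts.get(group, 0) / total if total else 0.0
        if abs(observed - expected) > tolerance:
            gaps[group] = (observed, expected)
    return gaps


# Made-up training sample: 90 records from one group, 10 from a second,
# and none at all from a third group that exists in the population.
sample = ["group_a"] * 90 + ["group_b"] * 10

# Made-up reference shares for the population the system will be used on.
reference = {"group_a": 0.5, "group_b": 0.3, "group_c": 0.2}

gaps = representation_gaps(sample, reference)
for group, (observed, expected) in sorted(gaps.items()):
    print(f"{group}: {observed:.0%} of sample vs {expected:.0%} of reference")
```

A check like this flags only the groups someone thought to list in the reference; it cannot reveal a category that nobody recorded in the first place, which is the deeper problem the quote points to.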